Responsive Learning Analytics in Large College Classrooms

Optimized quiz recommendations and individualized content delivery using sparse factor analysis and MIRT

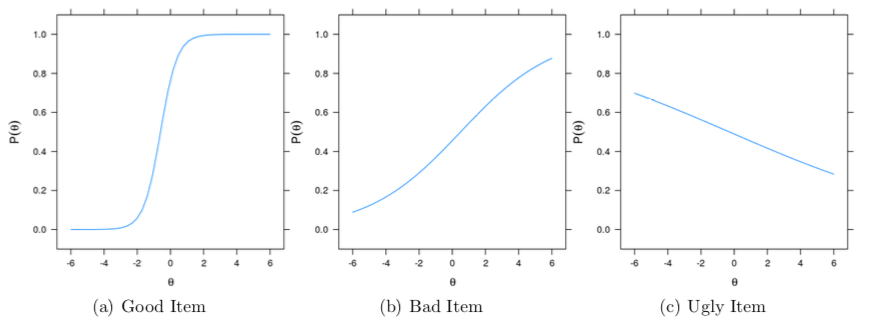

A large undergraduate course at the University of Texas implemented a new class structure that replaces high-stakes tests with daily quizzes administered during class via computer. I propose an innovation to optimize content delivery and improve learning outcomes. Given graded student quiz data from one semester of this course, I use multidimensional item response theory (MIRT) and sparse factor analysis (SPARFA) to jointly estimate the concepts underlying the items and each student’s mastery of these concepts, demonstrating the efficacy of this approach in a real-world example.

After comparing these methods, I explore free-response and student chat data using basic natural language processing. Techniques from learning analytics can help realize the full potential of spaced retrieval practice in the classroom by optimizing the selection of repeated items so as to target remediation. Furthermore, such techniques can be used to introduce variability into retrieval practice, encouraging a deeper understanding of the content which is more likely to transfer to novel problems.
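
As a rough illustration of how mastery estimates could drive the selection of repeated items, the sketch below assumes a compensatory logistic MIRT model, in which the probability of a correct response is the logistic function of a weighted sum of concept masteries plus an item intercept. The item bank, the loadings, the student's mastery vector, and the "pick the item with the lowest predicted success" rule are all hypothetical stand-ins, not the parameters or the selection rule used in this project.

```python
import math


def prob_correct(theta, loadings, intercept):
    """Compensatory MIRT: P(correct) = logistic(loadings . theta + intercept)."""
    z = sum(a * t for a, t in zip(loadings, theta)) + intercept
    return 1.0 / (1.0 + math.exp(-z))


def pick_review_item(theta, items):
    """Return the name of the item this student is least likely to answer correctly."""
    return min(items, key=lambda name: prob_correct(theta, *items[name]))


# Hypothetical item bank: name -> (loadings on two concepts, intercept).
items = {
    "item_a": ((1.2, 0.0), 0.3),   # taps concept 1 only
    "item_b": ((0.0, 1.5), -0.2),  # taps concept 2 only
    "item_c": ((0.7, 0.7), 0.0),   # taps both concepts
}

# Hypothetical mastery estimate: strong on concept 1, weak on concept 2.
student_theta = (1.0, -1.0)

for name, (loadings, intercept) in items.items():
    print(name, round(prob_correct(student_theta, loadings, intercept), 3))
print("review next:", pick_review_item(student_theta, items))
```

Always choosing the hardest item is only one possible rule; targeting a moderate predicted success rate, or rotating among different items that load on the same weak concept, would fit the goal of variable retrieval practice equally well.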

Retrieving and Applying Knowledge to Different Examples Promotes Transfer of Learning

Testing yourself with different examples of a concept improves transfer of that concept to novel contexts

Introducing variability during learning can facilitate transfer to new contexts (i.e., generalization). We explore the concept of variability in an area of research where its effects have received little attention: learning through retrieval practice. In four experiments, we investigate whether retrieval practice with different examples of a concept promotes greater transfer than repeated retrieval practice with the same example. All four experiments showed that variability during retrieval practice produced superior transfer of knowledge to new examples. Experiments 3 and 4 also showed a testing effect and a benefit of studying different examples. Overall, the findings suggest that repeatedly retrieving and applying knowledge to different examples is a powerful method for acquiring knowledge that will transfer to a variety of new contexts.

Educational Practices in Large College Classrooms— What Really Goes On?

Exploratory analyses mining data from 1100+ college syllabi

A large-scale characterization of normative educational practices (e.g., course structure, teaching methods, learning activities) across more than 1,000 high-enrollment undergraduate courses at a large public institution over the last 5 years. I assess the extent to which course features reflect educational best-practices by systematically reviewing course syllabi, documenting the type, quantity, and grade weight of all work assigned in each course as well as the prevalence and variability of teaching practices such as group activities, retrieval practice, and in-class active learning. I assess the degree to which these variables have changed over time, how they differ across colleges, and whether they form distinct clusters.

I also analyze the language used in each syllabus to see how instructors communicate information to students. I examine pronouns, comparisons, negations, and words related to achievement versus affiliation; I perform sentiment analysis on each syllabus; I isolate words that are unique to certain syllabi, courses, departments, and colleges; and I look at how similar two syllabi from the same course are on average.
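
To make the similarity comparison concrete, here is a minimal sketch of one common way to score how alike two syllabi are: cosine similarity between bag-of-words count vectors. The tokenizer, the two snippets of syllabus text, and the choice of cosine similarity itself are assumptions for illustration; the measure actually used in this project may differ.

```python
import math
import re
from collections import Counter


def word_counts(text):
    """Lowercase the text and count word tokens (letters and apostrophes only)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(text_a, text_b):
    """Cosine of the angle between two bag-of-words count vectors (0 to 1)."""
    a, b = word_counts(text_a), word_counts(text_b)
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty document is treated as similar to nothing
    return dot / (norm_a * norm_b)


# Hypothetical excerpts from two sections of the same course.
syllabus_1 = "You will take a short quiz every week. Late work is not accepted."
syllabus_2 = "We will have a short quiz every week. Late work loses ten points."

print(round(cosine_similarity(syllabus_1, syllabus_2), 3))
```

Averaging this score over all pairs of syllabi within a course gives a single within-course similarity figure that can be compared across departments or colleges.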

Findings revealed that high-stakes exams are the norm, active learning is relatively uncommon, and students get few opportunities for spaced retrieval practice. Importantly, no one college has a monopoly on educational best-practices; different colleges had different strengths. Trends over time were mostly positive, indicating increased adoption of many best-practices, with a few exceptions.

Estimating the Causal Effect of Retrieval Practice on Future Course Performance

Does taking a class with frequent quizzing opportunities produce better retention and transfer of learning?

This research builds directly upon my descriptive analysis (see above) by combining the syllabus dataset with student records to assess how prerequisite-course features affect student performance in their subsequent courses. Specifically, introductory courses high and low in retrieval-practice requirements were compared in terms of their students’ subsequent success using inverse propensity-score weighted logistic regressions with cluster-robust standard errors to improve causal inference. Results showed that additional retrieval practice improved students’ performance in their subsequent courses. However, the average treatment effect estimates were small and somewhat sensitive to variations in model specification. Finally, subsequent-course performance was regressed on the full set of educationally relevant variables using lasso regularization, identifying a set of instructional variables related to retrieval practice and spacing (including number of quizzes and cumulative exams) as important correlates of student success.
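
The core idea of inverse propensity-score weighting can be sketched in a few lines. This toy version is not the analysis reported here: it assumes a single categorical confounder (a hypothetical "preparation" stratum), estimates each propensity score as the share of treated students in that stratum, and omits cluster-robust standard errors entirely. Because the only regressor is the treatment indicator, the weighted logistic regression coefficient equals the log of the weighted odds ratio, so it is computed in closed form rather than by fitting a model. All data below are made up.

```python
import math
from collections import defaultdict


def ipw_effect(rows):
    """rows: (stratum, treated, outcome) tuples with treated/outcome in {0, 1}.

    Returns (weighted risk difference, weighted log odds ratio).
    Assumes every stratum contains both treated and untreated students.
    """
    # Propensity score: share of treated students within each stratum.
    n = defaultdict(int)
    n_treated = defaultdict(int)
    for stratum, treated, _ in rows:
        n[stratum] += 1
        n_treated[stratum] += treated

    # Weight each student by 1 / P(receiving the treatment they actually got).
    w_sum = {0: 0.0, 1: 0.0}
    wy_sum = {0: 0.0, 1: 0.0}
    for stratum, treated, outcome in rows:
        p = n_treated[stratum] / n[stratum]
        weight = 1.0 / p if treated else 1.0 / (1.0 - p)
        w_sum[treated] += weight
        wy_sum[treated] += weight * outcome

    p1 = wy_sum[1] / w_sum[1]  # weighted success rate, high retrieval practice
    p0 = wy_sum[0] / w_sum[0]  # weighted success rate, low retrieval practice
    log_or = math.log(p1 / (1 - p1)) - math.log(p0 / (1 - p0))
    return p1 - p0, log_or


def make_rows(stratum, treated, n_pass, n_fail):
    return [(stratum, treated, 1)] * n_pass + [(stratum, treated, 0)] * n_fail


# Hypothetical data: well-prepared students are over-represented in the
# high-retrieval-practice course, which inflates the naive comparison.
rows = (
    make_rows("high_prep", 1, 6, 2) + make_rows("high_prep", 0, 1, 1)
    + make_rows("low_prep", 1, 1, 1) + make_rows("low_prep", 0, 2, 6)
)

treated_y = [y for _, t, y in rows if t == 1]
control_y = [y for _, t, y in rows if t == 0]
naive = sum(treated_y) / len(treated_y) - sum(control_y) / len(control_y)
risk_diff, log_or = ipw_effect(rows)
print(f"naive difference: {naive:.2f}")
print(f"IPW difference:   {risk_diff:.2f}")
print(f"IPW log odds ratio: {log_or:.3f}")
```

In this made-up example the weighting shrinks the apparent benefit relative to the naive comparison, because it rebalances the two groups on preparation before comparing outcomes.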

Toward Consilience in the Use of Task-Level Feedback to Promote Learning

Synthesizing, unifying research on feedback best-practices

A literature review and theoretical framework for understanding how people learn from feedback. Three main findings are situated within their respective theoretical accounts and then integrated: feedback messages should convey information about correctness, highlight the correct answer, and provide additional context for why the answer is correct; errors made with higher confidence are more likely to be corrected (the “hypercorrection effect”); and delayed feedback produces better long-term learning than immediate feedback.

Teaching

I am an educator at heart and also by training

I am an expert in the science of learning and I have been plying my trade (i.e., teaching) in various capacities (high school classes, private tutoring, statistics short courses, training seminars, research consulting, college courses) for about 10 years now. Click to learn more about my pedagogical background and outlook.